Reflection on K-PAI’s Tenth Forum: The Human-Centric AI Revolution - From Technical Compliance to Humanistic Leadership

posted: 26-Aug-2025 & updated: 22-Jan-2026

Want to share this reflection? Use this link — https://k-privateai.github.io/seminar-reflections/10 — to share!

The achievement of 10 forums represents not just a numerical milestone but evidence of K-PAI’s successful evolution into an essential venue for Silicon Valley’s most important conversations about AI’s future. The quality of speakers, the depth of audience engagement, and the sophistication of the discussions demonstrate the forum’s unique value in fostering the kind of cross-disciplinary dialogue necessary for responsible AI development.

The 10th K-PAI Forum marked a pivotal evolution in Silicon Valley’s AI discourse—moving beyond technical implementation to explore how legal frameworks and humanistic principles can work together to create AI systems that truly serve humanity while meeting regulatory requirements.

The 10th Silicon Valley Private AI Forum (K-PAI), held on August 20, 2025, at Stanford University’s EVGR Theater, represented a landmark achievement for the K-PAI community. This milestone event, themed “The Human-Centric AI Revolution - From Technical Compliance to Humanistic Leadership,” brought together legal experts, AI developers, and visionary leaders to address one of the most pressing challenges of our time: how to build AI systems that are both legally compliant and genuinely human-centered.

A Milestone Achievement

Reaching the tenth forum represents a significant achievement for K-PAI’s mission of fostering privacy-first AI innovation. The choice to focus on the intersection of legal compliance and humanistic leadership proved remarkably prescient, addressing the growing recognition that technical excellence alone is insufficient for responsible AI development. The collaboration between legal expertise from Quinn Emanuel and transformative leadership insights demonstrated K-PAI’s unique ability to bridge traditionally separate domains.

The Stanford venue provided an appropriate setting for this landmark discussion, reinforcing the academic rigor and intellectual depth that have become characteristic of K-PAI events. The continued strong attendance and engagement from Silicon Valley’s AI community underscore the forum’s established position as a premier venue for meaningful dialogue about responsible AI development.

Legal Foundations – Natalie Huh’s Regulatory Insights

Natalie Huh’s presentation on “From Intellectual Property to Data Scraping and Privacy – AI Regulatory Insights for Engineers” provided essential grounding in the legal realities facing AI developers. Her comprehensive coverage of intellectual property challenges, including the evolving standards for AI-generated inventions and the complexities of patent eligibility, offered crucial guidance for technical professionals navigating an increasingly complex regulatory landscape.

Particularly valuable were her insights into data scraping regulations and their practical implications. The distinction between data that is publicly accessible and data that may legally be used is a gap in understanding that can have severe consequences for AI companies. Her emphasis on the growing importance of trade secrets as an alternative to patent protection for AI innovations reflects the pragmatic realities of current intellectual property law.

The presentation’s focus on practical implementation tips—from ensuring meaningful human contribution to inventive processes to establishing robust data governance frameworks—provided actionable guidance that bridges the gap between legal theory and engineering practice. This practical orientation exemplifies the kind of cross-disciplinary knowledge transfer that makes K-PAI forums uniquely valuable.

Humanistic Leadership – James Rhee’s Vision of Agency

James Rhee’s presentation on “Agency in a New World Order - AI and Humanism in Musical Counterpoint” introduced a fundamentally different paradigm for thinking about human-AI collaboration. The musical counterpoint metaphor—where different voices maintain their independence while creating harmony together—offers a compelling alternative to the dominant narrative of AI replacement or displacement.

The concept of “Agency” (주도성) provided a framework for understanding how human agency can be preserved and enhanced rather than diminished by AI systems. Rhee’s emphasis on agency as comprising intentionality, ownership of choices, competence, and adaptability offers concrete dimensions for evaluating human-AI interactions. The critical role of connectedness in preventing what he termed “warped agency” (narcissism, social isolation) highlights the importance of designing AI systems that enhance rather than undermine human relationships.

The red helicopter methodology’s integration of systems dynamics with creative processes presents an intriguing approach to organizational transformation. The emphasis on measurement, connectedness, and balance across dimensions of life, money, and joy suggests a holistic framework that could inform both AI system design and organizational implementation strategies.

The demonstration of Jennifer Kim Lin’s practical applications made these humanistic principles tangible, showing how abstract concepts of agency and counterpoint can be translated into functional tools and processes.

Bridging Technical & Humanistic Approaches

The forum’s most significant contribution was demonstrating how legal compliance and humanistic leadership can work synergistically rather than in tension. The Q&A session revealed that attendees have a sophisticated understanding of the practical challenges involved in implementing regulatory requirements and ethical principles at the same time.

The discussion highlighted several key integration points:

Accountability Frameworks – Legal compliance requires clear attribution of responsibility, while humanistic leadership demands genuine agency and empowerment. The integration of these requirements necessitates new organizational structures that distribute both authority and accountability appropriately. Participants identified specific mechanisms such as “agency audits” that assess both legal compliance and human empowerment outcomes, creating dual accountability systems where technical teams are responsible not just for performance metrics but for preserving human decision-making capacity.

Privacy as Enabler – Rather than viewing privacy requirements as constraints on AI development, the presentations framed privacy-preserving techniques as enablers of more sophisticated human-AI collaboration. This reframing has significant implications for how organizations approach compliance. For example, differential privacy techniques that protect individual data can simultaneously enable more authentic human feedback loops, creating AI systems that learn from human preferences without compromising individual autonomy.

Agency-Preserving Design – The concept of preserving human agency while leveraging AI capabilities provides practical design criteria that can satisfy both regulatory requirements and ethical imperatives. This emerged as a design philosophy where AI systems are evaluated not just for accuracy and efficiency, but for their impact on human competence, autonomy, and meaningful choice. Attendees discussed specific implementation approaches such as “explanatory interfaces” that meet legal transparency requirements while genuinely empowering users to understand and influence AI decisions.

Counterpoint Implementation – James Rhee’s musical metaphor translated into concrete technical practices during the discussion. Several attendees described “counterpoint architectures” where AI systems are designed to complement rather than replace human capabilities, maintaining distinct roles while achieving harmonious outcomes. This approach satisfies regulatory requirements for human oversight while genuinely enhancing rather than diminishing human agency.

Value Alignment Through Law – The presentations revealed how legal frameworks can serve as practical mechanisms for embedding humanistic values in AI systems. Rather than viewing regulation as external constraint, attendees explored how legal requirements for fairness, transparency, and human oversight can be implemented through technical architectures that inherently respect human dignity and agency.
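The discussion did not specify how an “explanatory interface” or a “counterpoint architecture” would be built, so the following is only an illustrative sketch of the general idea: the AI proposes an action together with its reasons, a human makes the final call, and every decision is logged so that oversight can be audited later. All names here (`Recommendation`, `Decision`, `OversightLog`, `decide`) are hypothetical and were not presented at the forum.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Recommendation:
    """What the AI proposes, plus the reasons shown to the human reviewer."""
    action: str
    confidence: float
    reasons: List[str]


@dataclass
class Decision:
    """The human's final choice, recorded next to the AI's proposal."""
    recommendation: Recommendation
    final_action: str
    overridden: bool


@dataclass
class OversightLog:
    """Hypothetical audit trail of human decisions over AI proposals."""
    decisions: List[Decision] = field(default_factory=list)

    def override_rate(self) -> float:
        # Share of decisions where the human chose something other than the proposal.
        if not self.decisions:
            return 0.0
        return sum(d.overridden for d in self.decisions) / len(self.decisions)


def decide(
    rec: Recommendation,
    human_review: Callable[[Recommendation], str],
    log: OversightLog,
) -> Decision:
    """The AI only proposes; the human reviewer always returns the final action."""
    final_action = human_review(rec)
    decision = Decision(rec, final_action, overridden=(final_action != rec.action))
    log.decisions.append(decision)
    return decision


if __name__ == "__main__":
    log = OversightLog()

    # Stand-in for a real reviewer: escalate anything the model is unsure about.
    def reviewer(rec: Recommendation) -> str:
        return rec.action if rec.confidence >= 0.8 else "escalate"

    decide(Recommendation("approve", 0.95, ["income verified"]), reviewer, log)
    decide(Recommendation("deny", 0.55, ["thin credit file"]), reviewer, log)
    print(log.override_rate())
```

An override rate is a crude signal on its own. It could plausibly be one input to the kind of “agency audit” participants mentioned, but what such an audit should measure remains an open question.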

Emerging Themes and Industry Implications

Several critical themes emerged from the evening’s discussions, each with profound implications for how Silicon Valley and the broader AI industry approach development, deployment, and governance:

The Evolution of AI Governance

The forum demonstrated a fundamental maturation in thinking about AI governance, moving beyond the traditional adversarial relationship between regulation and innovation toward what several attendees termed “symbiotic governance.” This evolution reflects a growing recognition that regulatory frameworks, when thoughtfully designed, can actually accelerate responsible innovation rather than constrain it.

The discussion revealed three distinct phases in AI governance evolution:

- Phase 1 – Reactive compliance: treating regulation as external constraints to be minimized

- Phase 2 – Proactive compliance: anticipating regulatory requirements and building them into development processes

- Phase 3 – Governance-enabled innovation: using regulatory frameworks as design principles that enhance rather than limit AI capabilities

Industry implications include the emergence of new roles such as “AI Constitutionalists” who specialize in translating legal requirements into technical architectures, and the development of “regulatory-native” AI systems designed from inception to exceed compliance requirements while maximizing human benefit.

Practical Ethics Implementation

The presentations moved ethical AI discourse from philosophical abstraction toward operational concreteness. James Rhee’s agency framework and Natalie Huh’s compliance roadmaps provided what participants described as “ethics with implementation pathways”: specific practices that organizations can adopt immediately.

This practical turn has several industry implications:

Measurable Ethics – Organizations are beginning to develop “agency metrics” alongside traditional performance indicators. Companies like those represented at the forum are experimenting with “human empowerment scores” that track whether AI deployments increase or decrease human autonomy, competence, and meaningful choice.

Ethics-Driven Architecture – The counterpoint metaphor is inspiring new architectural patterns where AI systems are designed with “human agency preservation” as a primary design constraint, not an afterthought. This includes developing AI interfaces that explicitly enhance rather than replace human decision-making capabilities.

Compliance as Competitive Advantage – Forward-thinking organizations are beginning to view deep ethical compliance not as a cost center but as a source of sustainable competitive advantage, particularly in B2B markets where clients increasingly demand evidence of responsible AI practices.

Cross-Disciplinary Collaboration as Strategic Necessity

The successful integration of legal expertise and humanistic leadership insights at the forum reflects a broader industry recognition that AI development can no longer be dominated by purely technical considerations. The evening demonstrated that cross-disciplinary collaboration is evolving from “nice to have” to strategic necessity.

New Organizational Structures – Companies are experimenting with “integrated development teams” that include legal, ethical, and humanistic expertise from project inception rather than consulting these perspectives only during review phases. Some organizations are creating “Chief Agency Officers” whose role is ensuring that AI deployments enhance rather than diminish human capabilities.

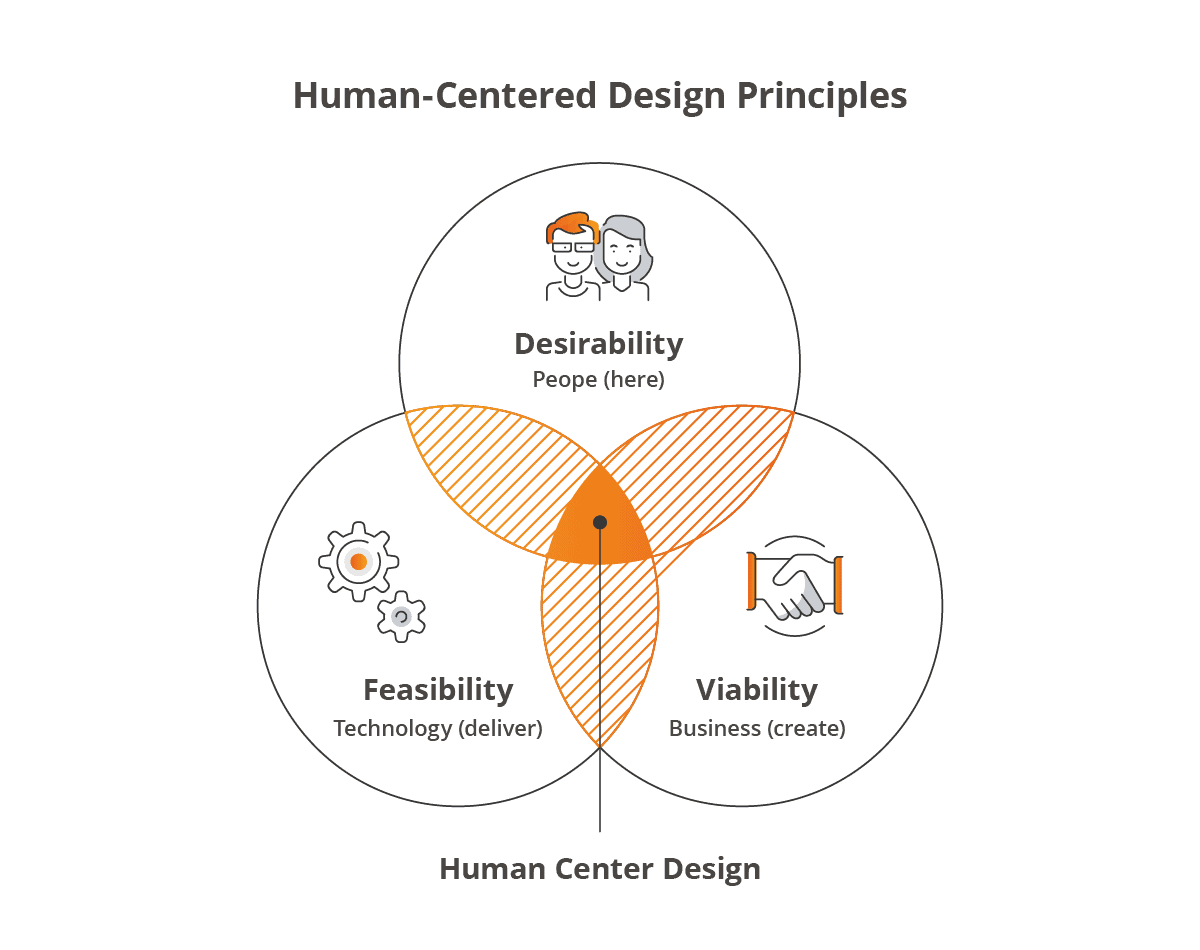

Educational Implications – The forum’s success suggests growing demand for “T-shaped professionals” who combine deep technical expertise with meaningful literacy in law, ethics, and human-centered design. Several attendees mentioned their organizations’ investments in cross-disciplinary training programs.

Partnership Evolution – The industry is seeing the emergence of new partnership models between technology companies, law firms, and humanistic organizations. These partnerships go beyond traditional client-service relationships toward genuine collaborative development of AI systems that satisfy technical, legal, and ethical requirements simultaneously.

Human-Centric Design as Market Differentiator

The emphasis on preserving and enhancing human agency is beginning to emerge as a genuine market differentiator rather than merely a compliance requirement. Consumer and enterprise buyers are increasingly sophisticated in evaluating AI systems based on their impact on human autonomy and empowerment.

Consumer Awareness Evolution – End users are becoming more discerning about whether AI systems diminish or enhance their capabilities. This is driving demand for “agency-positive” AI that makes humans more capable rather than more dependent.

Enterprise Adoption Criteria – B2B customers are developing procurement criteria that explicitly evaluate AI systems’ impact on employee agency, skill development, and job satisfaction. Organizations are recognizing that AI systems that demoralize or de-skill their workforce create long-term competitive disadvantages.

Talent Attraction and Retention – Top AI talent increasingly wants to work on projects that align with humanistic values. Organizations that can demonstrate genuine commitment to human-centric AI development are finding significant advantages in recruiting and retaining the best technical professionals.

The Convergence of Privacy and Agency

A particularly significant theme was the recognition that privacy-preserving AI technologies and human agency enhancement are not just compatible but mutually reinforcing. This convergence has profound implications for how the industry approaches both technical development and market positioning.

Technical Synergies – Privacy-preserving techniques like federated learning and differential privacy inherently preserve human control over data, which directly supports human agency. This alignment suggests that privacy-first AI development naturally leads to more human-centric outcomes.

Regulatory Alignment – The forum revealed how privacy regulations like GDPR and emerging AI governance frameworks create consistent incentives for human-centric design. Organizations that excel at privacy preservation often find compliance with human-centric AI requirements more straightforward.

Market Positioning – Companies are beginning to market their privacy-preserving AI capabilities not just as security features but as human empowerment tools. This reframing opens new market opportunities and customer relationships.
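To make the Technical Synergies point above more concrete, here is a minimal sketch of one standard differential privacy technique, the Laplace mechanism, applied to the average of user feedback ratings. It was not shown at the forum. The function names, the rating range, and the choice of epsilon are assumptions made for illustration, and the sketch assumes the number of ratings is public.

```python
import random
from typing import Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential draws with mean `scale`
    follows a Laplace distribution with location 0 and that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(
    ratings: Sequence[float],
    lower: float,
    upper: float,
    epsilon: float,
    rng: random.Random,
) -> float:
    """Return a noisy mean of `ratings` using the Laplace mechanism.

    Assumptions (illustrative, not from the forum):
    - each person contributes exactly one rating;
    - the number of ratings is public;
    - ratings are clipped to [lower, upper], so changing one person's rating
      moves the mean by at most (upper - lower) / n.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if not ratings:
        raise ValueError("ratings must not be empty")
    if upper <= lower:
        raise ValueError("upper must be greater than lower")

    clipped = [min(max(r, lower), upper) for r in ratings]
    n = len(clipped)
    sensitivity = (upper - lower) / n  # largest effect of any single rating
    scale = sensitivity / epsilon      # smaller epsilon means more noise
    return sum(clipped) / n + laplace_noise(scale, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    ratings = [1, 2, 3, 4, 5] * 200  # 1,000 hypothetical feedback ratings
    print(private_mean(ratings, lower=1.0, upper=5.0, epsilon=1.0, rng=rng))
```

With many contributors the added noise is small relative to the mean, which is the sense in which aggregate human feedback can still be learned from while any one person's rating stays protected. A production system would also need to handle floating-point subtleties and track the privacy budget across repeated queries, which this sketch ignores.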

The Institutionalization of AI Ethics

The sophistication of the forum’s discussions reflected the ongoing institutionalization of AI ethics within Silicon Valley organizations. This is moving beyond individual champions or voluntary initiatives toward systematic organizational capabilities.

Systematic Capability Building – Organizations are developing institutional capabilities for ethical AI development, including specialized roles, dedicated budgets, and integration with core business processes. The forum’s attendee list reflected this trend, with many participants holding titles like “AI Ethics Lead” or “Responsible AI Director.”

Standards and Certification Evolution – The industry is moving toward standardized approaches for evaluating and certifying human-centric AI systems. Several forum participants mentioned ongoing work on “agency preservation standards” that could become industry benchmarks.

Supply Chain Integration – Large technology companies are beginning to require human-centric AI practices from their suppliers and partners, creating market incentives that extend ethical AI practices throughout the technology ecosystem.

Global Implications and Cultural Translation

While the forum focused primarily on Silicon Valley perspectives, the discussions revealed awareness of global implications and the need for cultural translation of human-centric AI principles.

Cultural Adaptation – The concept of “agency” requires thoughtful translation across different cultural contexts. Organizations operating globally are developing frameworks for implementing human-centric AI that respects cultural differences in autonomy, decision-making, and human-technology relationships.

Regulatory Harmonization – The forum’s international attendance reflected growing recognition that responsible AI development requires coordination across regulatory jurisdictions. Participants discussed the emergence of “global AI governance networks” that coordinate standards and practices across borders.

Development Model Export – Silicon Valley’s evolution toward human-centric AI development is beginning to influence AI development practices in other global technology hubs, creating opportunities for knowledge sharing and collaborative development of responsible AI practices.

Areas for Future Exploration

While the forum provided excellent foundations, several areas warrant deeper investigation:

International Regulatory Harmonization – The discussion focused primarily on U.S. legal frameworks, but global AI deployment requires understanding how different regulatory approaches can be reconciled.

Measurement and Assessment – While the humanistic leadership framework provides conceptual clarity, developing practical metrics for assessing agency preservation and enhancement remains challenging.

Organizational Transformation – Implementing these approaches requires significant organizational change capabilities that many technology companies may lack.

Scalability Challenges – The highly personalized nature of agency-preserving design may create challenges for large-scale AI deployment.

Key Takeaways for AI Development

The forum yielded several actionable insights for AI practitioners:

Legal-First Design – Incorporating legal requirements from the earliest stages of AI system design rather than treating compliance as an afterthought.

Agency Assessment – Developing systematic approaches for evaluating how AI systems impact human agency and autonomy.

Holistic Frameworks – Moving beyond narrow technical optimization toward integrated approaches that consider legal, ethical, and humanistic dimensions.

Community Engagement – Recognizing that responsible AI development requires ongoing dialogue with diverse stakeholders rather than isolated technical development.

Looking Forward

The 10th K-PAI Forum established a foundation for the kind of integrated thinking necessary for navigating AI’s complex future. The successful bridging of legal and humanistic perspectives suggests that the traditional silos between technical, legal, and ethical considerations can be transcended.

As we look toward future K-PAI events, the challenge will be building on these foundations to develop even more sophisticated approaches to responsible AI development. The forum’s emphasis on practical implementation suggests that future events might focus on case studies and detailed examples of successful integration.

Conclusion

The 10th K-PAI Forum successfully demonstrated that the apparent tension between technical compliance and humanistic leadership is false—that properly designed AI systems can satisfy legal requirements while preserving and enhancing human agency. The integration of Natalie Huh’s regulatory expertise with James Rhee’s humanistic framework provided a compelling vision for AI development that serves both legal and ethical imperatives.

The musical counterpoint metaphor offers a particularly powerful framework for thinking about human-AI collaboration. Rather than viewing AI as either a tool or a replacement for human capabilities, the counterpoint model suggests genuine partnership where different capabilities complement each other while maintaining their distinct characteristics.

As K-PAI continues to evolve, the foundations established by this tenth forum will undoubtedly inform future discussions about privacy-preserving AI, regulatory compliance, and human-centered design. The success of this milestone event reinforces K-PAI’s position as Silicon Valley’s premier forum for responsible AI innovation.

The human-centric AI revolution is not about choosing between technological advancement and human values—it is about creating systems where both can flourish together in counterpoint, each maintaining their essential characteristics while creating something greater than either could achieve alone.